Hey Snips!

Or how I got my child to study multiplication tables thanks to Snips

OK, I will assume that you have already heard about voice assistants, considering the buzz around them. Indeed, Google, Amazon and Apple dream of putting their voice-activated services in every home, car and office.

Thanks to their huge cloud services they are able to capture requests from every device and to deliver answers.

But do you really know how they work? And is this cloud-dependent approach the only way to achieve the same kind of task?

So we speak to a device, mainly for two kinds of requests:

- To interact with an online service: from asking for today’s weather forecast to more complex tasks such as checking what’s next in your agenda or looking for the best price for this or that.

- To interact with your local environment: whether it’s to switch on light bulbs in your home or to manage your car’s infotainment system.

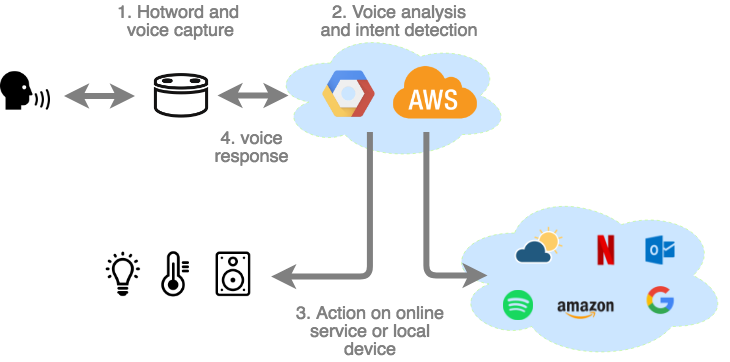

Cloud based

In both cases, with voice assistants like Google Home or Alexa, the device will have to reach a cloud-based service to analyse your request and decide what to do to give you back a result:

- For the online service, your voice assistant’s provider acts as a proxy to transform your request into something that the online service will understand. Then it transforms the service response into a voice response.

- For the local environment, it’s the same. But since we are addressing local equipment, each piece of equipment has to be connected to the internet somehow (directly, or through an intermediate hub or online service), because it needs to receive the action you requested from the voice assistant’s provider.

This approach is great for many reasons, but it also comes with some issues and fears. Let’s review the pros and cons.

Pros:

- Google and Amazon use their huge cloud resources to build a scalable and continuously enhanced AI service that analyses voice and extracts intents.

- They have also built an app-store logic (Actions on Google or the Alexa Skill console) that allows any developer to easily build and publish a bridge between voice assistants and their own services. Developers benefit from the cloud providers’ rich ecosystem and from their worldwide reach.

- Thanks to this app-store logic, these assistants are able to recognise a lot of things and to offer a lot of services out of the box.

Cons:

- First, the consumer is cloud-dependent: you need an internet connection. That is obvious, but important to keep in mind, especially as these services become more and more important on a day-to-day basis, for instance for home automation: no internet means no more “OK Google, switch on the light in the living room”. We can also imagine other use cases that have to work in environments where internet access is unreliable (industrial facilities, transportation, …).

- Second is privacy. To be clear, after hotword detection, every single word you say to your voice assistant is sent to the cloud and recorded. As a voice assistant owner, you can access these recordings on the device configuration page (e.g. the Alexa console) linked to your Google or Amazon account. These recordings also include anybody speaking around you (guests, kids, …) close enough to the device microphone. This leads to privacy concerns: who has access to what, and for how long?

- Third, these products are still young. They do not know how to handle situations that seem very common to me: a physical voice assistant is always bound to a single identity, and it is not able to identify who is speaking and switch to another user identity. This leads, for example, to the unpleasant situation where kids or guests at home can trigger any action, with no way to restrict usage (starting YouTube, accessing your agenda, …). This is a hot topic for Google and Amazon: for example, Alexa services are now available in a special version (Alexa for Hospitality) aimed at the hotel industry.

OK… so what? What could the alternatives be, and with which drawbacks?

No Cloud Dependency

Yes, alternatives exist. But before talking about them, we need to understand what the “voice analysis” concept I used earlier actually means.

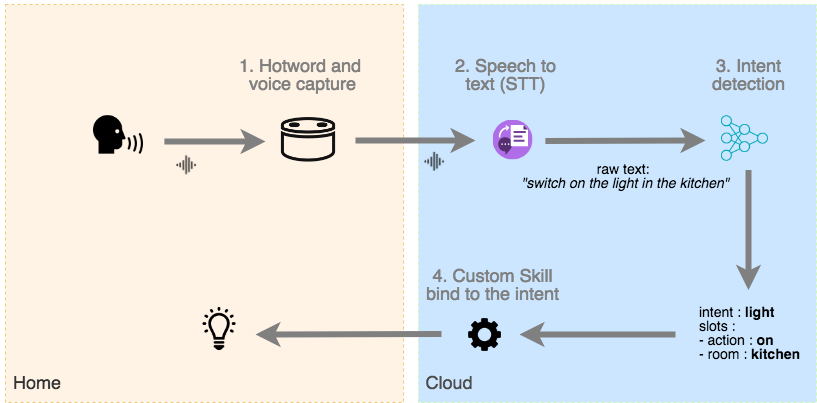

We can describe the process a voice assistant uses to transform voice into intent with this pipeline:

- Hotword and voice capture: the local device is configured to recognise a specific keyword that triggers the voice recording and its transmission to the cloud.

- Speech to text: this cloud service transforms a voice signal into text. It is managed by the cloud provider and based on machine learning algorithms.

- Intent detection: the intent is either a built-in one (OK Google/Alexa, what time is it?) or a custom one. Custom intents are configured in the cloud provider’s app-store logic. Again, this is based on machine learning models, but as a developer you need to train them to recognise the sentences you want to handle. To do so, you have to use specific tools from the cloud provider (e.g. Actions on Google or the Alexa Skill console). This step can take time, as the quality of prediction depends on having a sufficient amount of training data.

- Custom skill: here the developer has to build their own business logic to achieve the specific task. The input is drawn from the list of intents for which we trained a machine learning model. This element can be an external service that the developer has to host and maintain, or the developer can rely on the cloud provider’s ecosystem to build and execute the business logic (e.g. AWS Lambda).

Last but not least, this pipeline is always linked to one specific language. So if you want to support several languages, you will have to duplicate this pipeline for each language and retrain the intent detection model with the translated sentences.
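To make the four stages more concrete, here is a minimal Python sketch of such a pipeline. It is only an illustration under strong assumptions: the hotword `hey assistant`, the intent names `GetTime` and `SwitchLight`, and every function name are hypothetical, the "audio" is plain text, and simple keyword matching stands in for the trained speech-to-text and intent detection models.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

HOTWORD = "hey assistant"  # hypothetical hotword, not a real product's


@dataclass
class Intent:
    name: str
    slots: Dict[str, str] = field(default_factory=dict)


def detect_hotword(utterance: str) -> Optional[str]:
    """Stage 1: return what follows the hotword, or None if it is absent."""
    lowered = utterance.lower().strip()
    if not lowered.startswith(HOTWORD):
        return None
    return lowered[len(HOTWORD):].strip(" ,")


def speech_to_text(captured: str) -> str:
    """Stage 2: a real ASR model turns audio into text; here it is already text."""
    return captured


def detect_intent(text: str) -> Optional[Intent]:
    """Stage 3: toy keyword matching standing in for a trained model."""
    if "time" in text:
        return Intent("GetTime")
    if "light" in text:
        state = "off" if " off" in text else "on"
        return Intent("SwitchLight", {"state": state})
    return None


# Stage 4: one handler (the "custom skill") per intent name.
SKILLS: Dict[str, Callable[[Intent], str]] = {
    "GetTime": lambda intent: "It is noon.",  # fixed answer keeps the sketch simple
    "SwitchLight": lambda intent: f"Light switched {intent.slots['state']}.",
}


def handle(utterance: str) -> Optional[str]:
    captured = detect_hotword(utterance)
    if captured is None:
        return None  # no hotword: nothing is captured at all
    intent = detect_intent(speech_to_text(captured))
    if intent is None:
        return "Sorry, I did not understand."
    return SKILLS[intent.name](intent)
```

Calling `handle("Hey assistant, switch on the light")` goes through all four stages, while a sentence without the hotword is dropped at the first one. Supporting another language would mean swapping stages 2 and 3 for models trained on that language.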

Snips

OK, so if we want an alternative we need:

- The same voice analysis pipeline and machine learning capabilities, but running without cloud dependency.

- The ability to build our custom skills without cloud dependency.

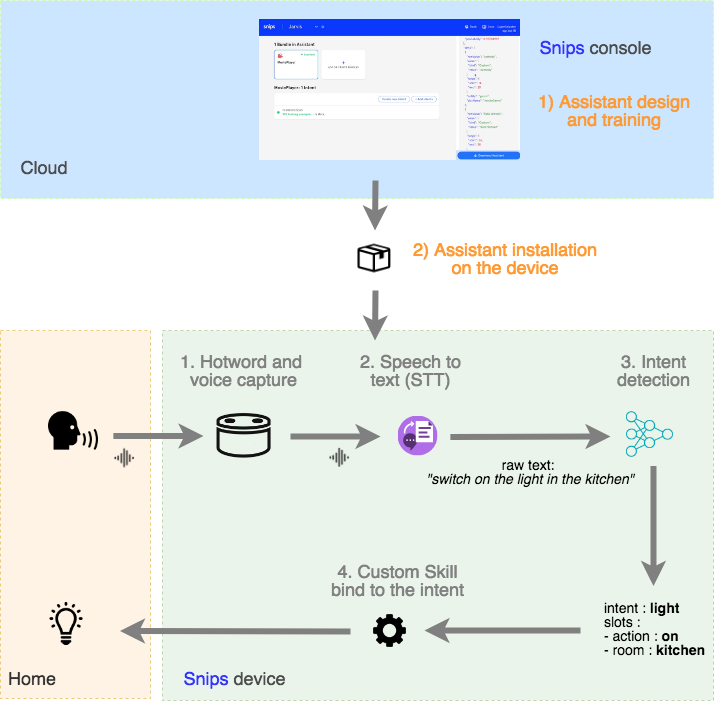

Snips is a start-up company that provides all of this:

- Wake Word: built-in or custom hotword

- Speech to Meaning: speech to text (ASR) and intent detection (NLU)

- Dialog: a framework to develop your custom skill

All of these run on the device, which means you can now build a voice assistant that works offline and guarantees privacy by design.

The only online steps are the assistant design and the machine learning training for intent detection, both done through the Snips console. This console offers an app-store logic to build and train your assistant, but also to share it with the people you work with or to benefit from someone else’s work.

The outcome of this console is an assistant package that will run on your target device, without any dependency on their online services. Your voice stays on the device.

A Snips assistant runs on Raspberry Pi, x86 Linux, Android and iOS.

As I write these lines they support English, French, German, Spanish, Japanese and Korean.

As for the drawbacks, a Snips device will only recognise and handle the intents configured in the assistant you have installed on the device. You will not have the full scope of services that you get out of the box from Google or Amazon.

That said, for now Snips is perfect for designing assistants dedicated to a specific list of skills: the skills you want to have, no more. When you want to develop a specific business voice application, that’s enough.

OK let’s play with Snips

Learning multiplication tables: I do not have good memories of that. And when my children reached the age to learn them at school, I saw the same symptoms. So we looked for some fun ways to help them learn these multiplication tables, with more or less success.

When I started to look at Snips, my kids were curious about their dad speaking to a computer. And I quickly came to this idea: let’s build a Snips app that will allow my kids to play and learn multiplication tables.

And indeed this was a good candidate: a multiplication tables quiz!

A simple quiz where the assistant asks your child a random multiplication, waits for the answer and keeps track of your child’s score.
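The quiz logic itself can be sketched in a few lines of Python. This is not the code of the actual Snips app, only a minimal illustration of the idea; the `Quiz` class, its method names and the choice of factors from 1 to 10 are assumptions made for the example.

```python
import random
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Quiz:
    table: int          # which table to practise, e.g. 2 for the table of two
    rng: random.Random  # injected so that a run can be reproduced with a seed
    asked: int = 0
    correct: int = 0
    current: Optional[Tuple[int, int]] = None

    def next_question(self) -> str:
        """Pick a random factor (assumed range: 1 to 10) and remember the question."""
        factor = self.rng.randint(1, 10)
        self.current = (self.table, factor)
        return f"{self.table} x {factor}?"

    def answer(self, value: int) -> bool:
        """Check the answer to the pending question and update the score."""
        if self.current is None:
            raise RuntimeError("ask a question first")
        a, b = self.current
        self.current = None
        self.asked += 1
        is_right = value == a * b
        if is_right:
            self.correct += 1
        return is_right

    def score(self) -> str:
        return f"{self.correct}/{self.asked}"
```

A session would create `Quiz(table=7, rng=random.Random())`, alternate `next_question()` and `answer(...)`, then read `score()` at the end. In a voice assistant, the question would be spoken and the answer would come from the recognised sentence rather than from a function argument.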

Let’s try it :)

OK, so what is the playground?

If you want to play with Snips, here is what you will need to start:

- A compatible device. The easiest is a Raspberry Pi, but you can have a look at the official documentation: https://snips.gitbook.io/documentation/snips-basics/what-do-i-need/hardware

- A microphone and a speaker attached to the device

- An account on the Snips console (https://console.snips.ai/signup)

One way to quickly start is to purchase a Snips maker kit (https://makers.snips.ai/kit/). Of course you can build your own by getting the components separately.

When you have the hardware, you need to install the Snips software on it. On a Raspberry Pi, you will need a Raspbian installation with a microphone and speaker properly configured. Then you will need to install a tool from Snips named “Sam”, which sets up the required software and lets you select and download assistants linked to your Snips account directly onto the device. Here are the documentation links:

- Operating system installation and configuration before Snips installation: https://snips.gitbook.io/documentation/installing-snips/on-a-raspberry-pi

- Snips “Sam” command line installation: https://snips.gitbook.io/getting-started/installation

Let’s install the multiplication table quiz

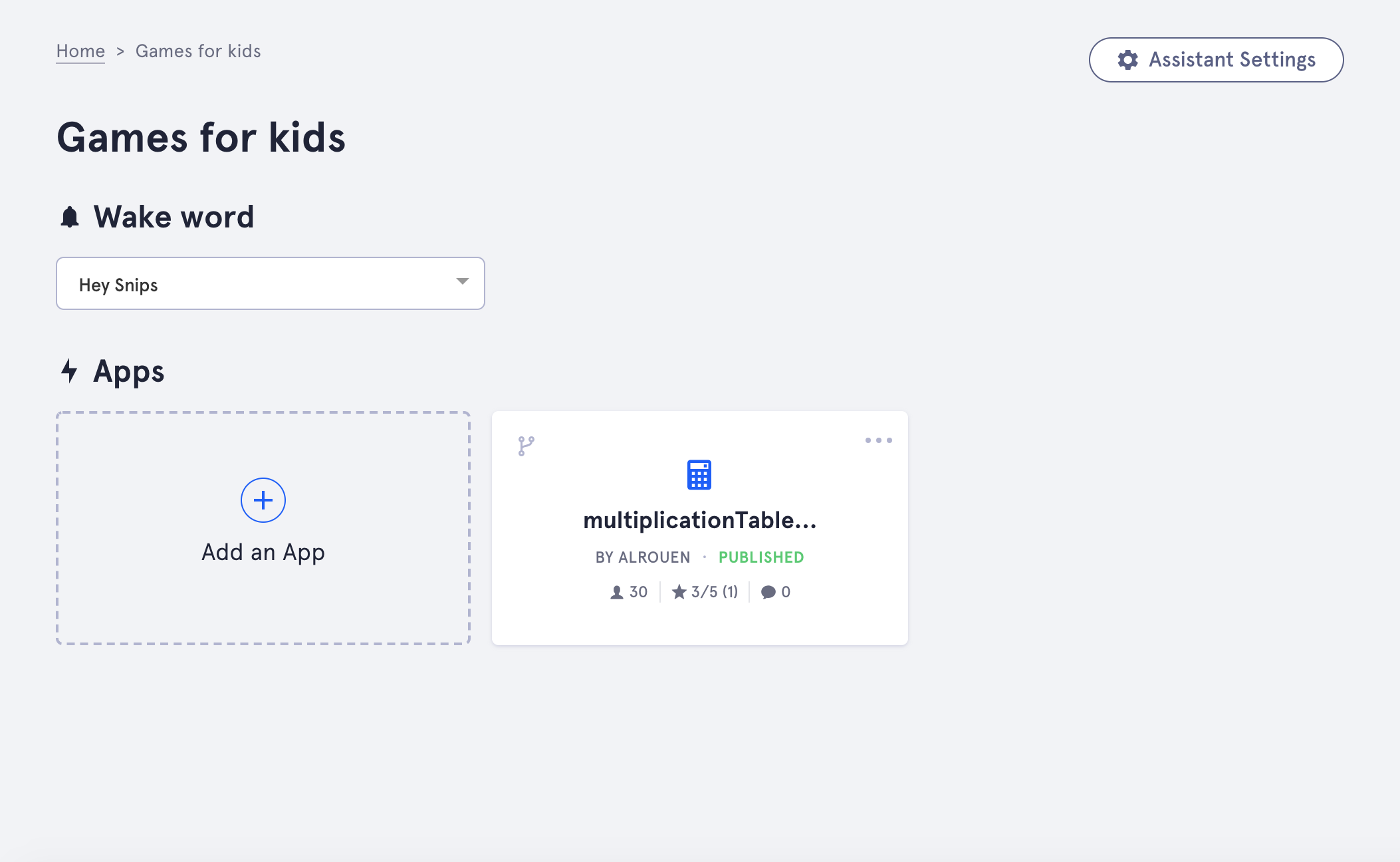

The first step to install an assistant with Sam is to create it in your Snips console. A Snips assistant is basically a set of skills you want to support in one specific language. The language cannot be changed after the assistant is created, so be careful.

After creating the assistant, you either add skills (Snips apps) made available by other users or build your own. In their app store, Snips allows you to browse, add, fork and publish “Snips apps”.

Right now, to use the multiplication tables quiz, we will just:

- Create an assistant in French, as my Snips app is only available in French for now (my kids speak French)

- Browse the store to add the Snips app named “multiplicationTableQuiz”

When it’s done you should have an assistant in the console that looks like:

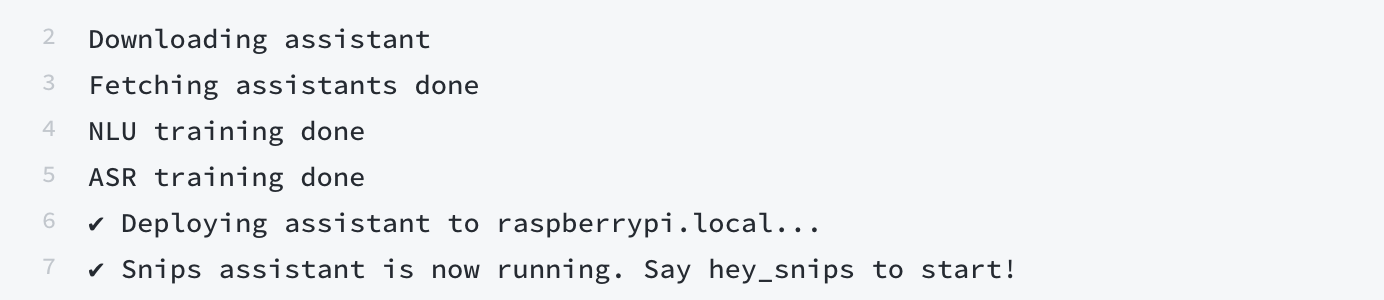

Then from the device, you just need to use the “Sam” command line to install this assistant.

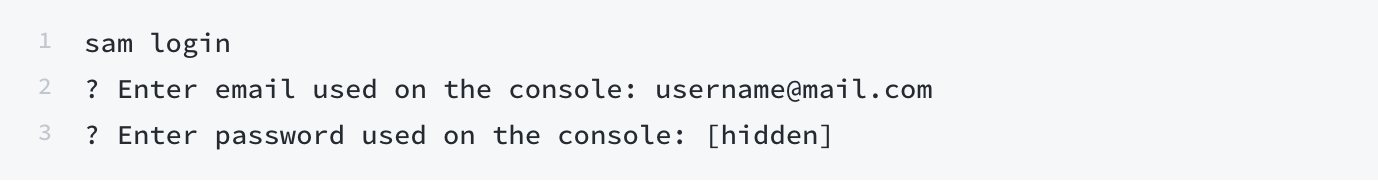

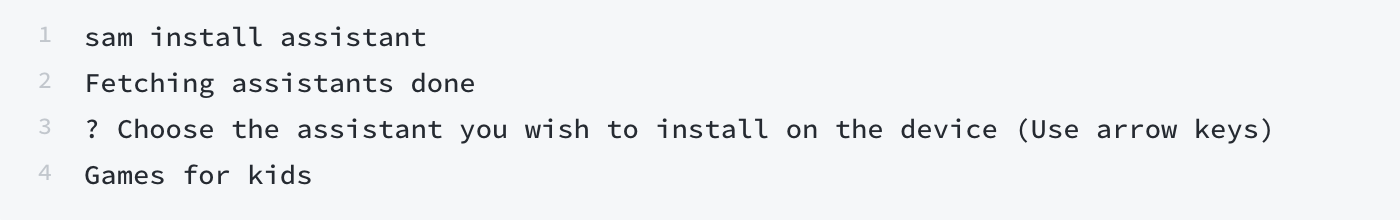

To do so, get access to your device’s command line (e.g. over SSH) and use these commands:

- If not yet done, authenticate to your Snips account:

- Install the assistant:

The whole tutorial from Snips is here: https://snips.gitbook.io/getting-started/install-an-assistant

When it’s done you can start the game by saying:

Hey Snips, lance la table des deux

Or:

Hey Snips, on peut faire la table des dix ?
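Both sentences carry the same intent (start a quiz) with one parameter, the table number. In Snips this is the job of the trained intent detection model; the Python sketch below only illustrates the idea with a regular expression, and the function name `requested_table` and the word list are assumptions made for the example.

```python
import re
from typing import Optional

# French number words for the tables from one to ten.
FRENCH_NUMBERS = {
    "un": 1, "deux": 2, "trois": 3, "quatre": 4, "cinq": 5,
    "six": 6, "sept": 7, "huit": 8, "neuf": 9, "dix": 10,
}


def requested_table(utterance: str) -> Optional[int]:
    """Return the table number asked for in a French sentence, or None."""
    text = utterance.lower()
    # Look for "table de/des/du <word>" and translate the word into a number.
    match = re.search(r"table\s+(?:de|des|du)\s+(\w+)", text)
    if match is None:
        return None
    return FRENCH_NUMBERS.get(match.group(1))
```

With this sketch, the first sentence yields 2 and the second yields 10, while a sentence that does not mention a table yields `None`. A trained model copes with far more variations than a pattern like this one.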

Have fun ;)

Assistant! App please

If you succeeded in installing and playing with this Snips assistant, that’s enough for now.

But later it could be cool to understand how it works behind the scenes, and then design and develop your own assistant with the skills you want. Let’s do that in a future post!

That’s all folks!
